Keyphrases:

Language Models. RNN. Bi-directional RNN. Deep RNN. GRU. LSTM.

Language Models

Language models compute the probability of occurrence of a number of words in a particular sequence.

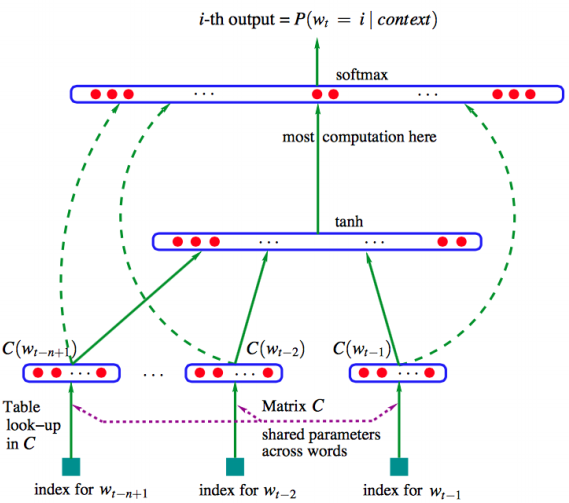

In some cases, the window of the past n consecutive words may not be sufficient to capture the context. Bengio et al. introduced the first large-scale deep learning model for natural language processing that captures this type of context by learning a distributed representation of words.

In all conventional language models, the memory requirements of the system grow exponentially with the window size n, making it nearly impossible to model large word windows without running out of memory.
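
As a rough illustration of this growth, the sketch below computes the worst-case number of distinct n-grams, $|V|^n$, for a vocabulary of 10,000 words. The helper name `ngram_table_size` and the vocabulary size are assumptions made for the example; real count tables store only the n-grams that were observed, so this is an upper bound rather than an actual memory figure.

```python
def ngram_table_size(vocab_size: int, n: int) -> int:
    """Upper bound on the number of distinct n-grams: |V| ** n."""
    return vocab_size ** n


# Hypothetical vocabulary of 10,000 words (an assumption for illustration).
sizes = {n: ngram_table_size(10_000, n) for n in (1, 2, 3, 4)}

for n, size in sizes.items():
    print(f"n={n}: up to {size:.1e} possible n-grams")
```

Each extra word of context multiplies the bound by the vocabulary size, which is why widening the window quickly becomes impractical.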

Recurrent Neural Networks (RNN)

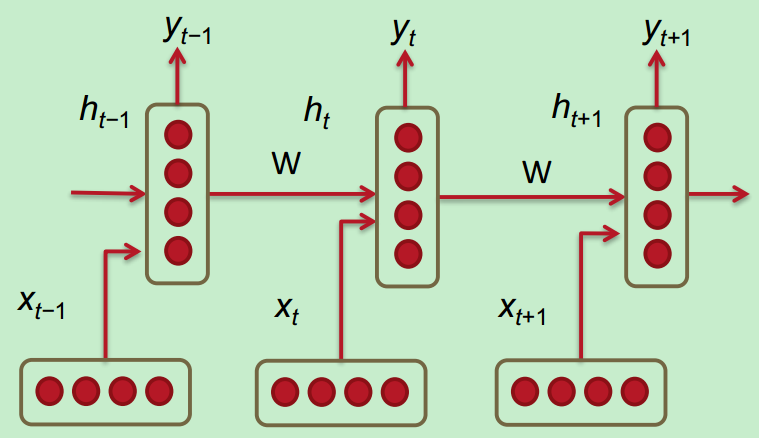

Recurrent Neural Networks (RNN) are capable of conditioning the model on all previous words in the corpus.

Below are the details associated with each parameter in the network:

- $x_1,…,x_t,…,x_T$: the word vectors corresponding to a corpus with T words

- $h_t=\sigma(W^{(hh)}h_{t-1}+W^{(hx)}x_{t})$: the relationship to compute the hidden layer output features at each time-step t

- $\hat{y}_t=\text{softmax}(W^{(S)}h_t)$: the output probability distribution over the vocabulary at each time-step t
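
The two relationships above can be sketched in NumPy as a simple forward pass. This is a minimal sketch, not a reference implementation: the function name `rnn_forward`, the dimensions, the random initialisation, and the zero initial hidden state are all assumptions chosen for the example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    z = z - np.max(z)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def rnn_forward(xs, W_hh, W_hx, W_s, h0):
    """Apply h_t = sigmoid(W_hh h_{t-1} + W_hx x_t) and y_t = softmax(W_s h_t)."""
    h = h0
    hs, ys = [], []
    for x in xs:  # one word vector per time-step
        h = sigmoid(W_hh @ h + W_hx @ x)
        hs.append(h)
        ys.append(softmax(W_s @ h))
    return np.array(hs), np.array(ys)


# Toy sizes (assumed): word-vector dim d, hidden dim D_h, vocabulary V, length T.
rng = np.random.default_rng(0)
d, D_h, V, T = 4, 3, 5, 6
W_hh = rng.normal(scale=0.1, size=(D_h, D_h))
W_hx = rng.normal(scale=0.1, size=(D_h, d))
W_s = rng.normal(scale=0.1, size=(V, D_h))
xs = rng.normal(size=(T, d))

hs, ys = rnn_forward(xs, W_hh, W_hx, W_s, h0=np.zeros(D_h))
print(hs.shape, ys.shape)
```

The same three weight matrices are reused at every time-step, so the number of parameters does not depend on the length of the sequence.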

The loss function used in RNNs is often the cross entropy error.

Perplexity is 2 raised to the power of the cross-entropy error, that is, 2 to the power of the average negative log (base 2) probability assigned to the correct words. It is a measure of confusion: lower values imply more confidence in predicting the next word in the sequence.
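
To make the link between cross entropy and perplexity concrete, here is a small sketch that measures the cross-entropy error in bits (log base 2) and raises 2 to that value. The function names and the toy probability tables are assumptions for the example.

```python
import numpy as np


def cross_entropy_bits(probs, targets):
    """Average negative log2 probability assigned to the correct words."""
    probs = np.asarray(probs, dtype=float)
    targets = np.asarray(targets)
    p_correct = probs[np.arange(len(targets)), targets]
    return -np.mean(np.log2(p_correct))


def perplexity(probs, targets):
    """Perplexity = 2 ** (cross-entropy error in bits)."""
    return 2.0 ** cross_entropy_bits(probs, targets)


targets = [0, 2, 3]

# A model that spreads probability uniformly over a 4-word vocabulary.
uniform = np.full((3, 4), 0.25)
ppl_uniform = perplexity(uniform, targets)

# A model that puts 0.9 on the correct word at every step.
confident = np.full((3, 4), 0.1 / 3)
confident[np.arange(3), targets] = 0.9
ppl_confident = perplexity(confident, targets)

print(ppl_uniform, ppl_confident)
```

The uniform model has a perplexity equal to the vocabulary size (it is as confused as guessing among 4 words), while the confident model's perplexity is close to 1.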

The amount of memory required to run an RNN layer is proportional to the number of words in the corpus.

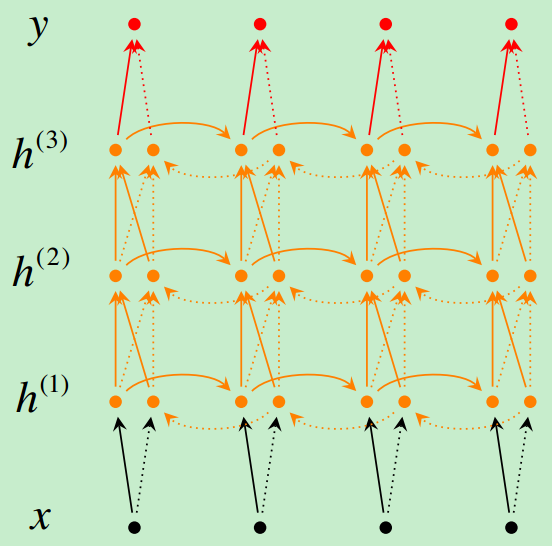

Deep Bidirectional RNNs

A bidirectional RNN makes predictions based on future words as well as past ones by having a second RNN read through the corpus backwards. A deep bidirectional RNN stacks several such layers.
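
A single bidirectional layer can be sketched as two independent recurrences, one reading left to right and one reading right to left, whose hidden states are concatenated at each position. The exact equations are not given in the text here, so the tanh nonlinearity, the absence of biases, the concatenation, and all names below are assumptions made for illustration.

```python
import numpy as np


def run_direction(xs, W_x, W_h, reverse=False):
    """Run a simple tanh RNN over xs, optionally from the last word to the first."""
    T = len(xs)
    h = np.zeros(W_h.shape[0])
    out = np.zeros((T, W_h.shape[0]))
    order = range(T - 1, -1, -1) if reverse else range(T)
    for t in order:
        h = np.tanh(W_x @ xs[t] + W_h @ h)
        out[t] = h  # store at the original position t
    return out


def bidirectional_states(xs, fwd, bwd):
    """Concatenate forward (past context) and backward (future context) states."""
    h_fwd = run_direction(xs, *fwd)
    h_bwd = run_direction(xs, *bwd, reverse=True)
    return np.concatenate([h_fwd, h_bwd], axis=1)


# Toy sizes (assumed): word-vector dim d, hidden dim D_h, sequence length T.
rng = np.random.default_rng(0)
d, D_h, T = 4, 3, 5
fwd = (rng.normal(scale=0.5, size=(D_h, d)), rng.normal(scale=0.5, size=(D_h, D_h)))
bwd = (rng.normal(scale=0.5, size=(D_h, d)), rng.normal(scale=0.5, size=(D_h, D_h)))
xs = rng.normal(size=(T, d))

states = bidirectional_states(xs, fwd, bwd)
print(states.shape)  # one vector of size 2 * D_h per position
```

In this sketch the first `D_h` entries at position t depend only on words up to t, and the remaining entries depend only on words from t onward. A deep variant would feed `states` as the input sequence to another bidirectional layer.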

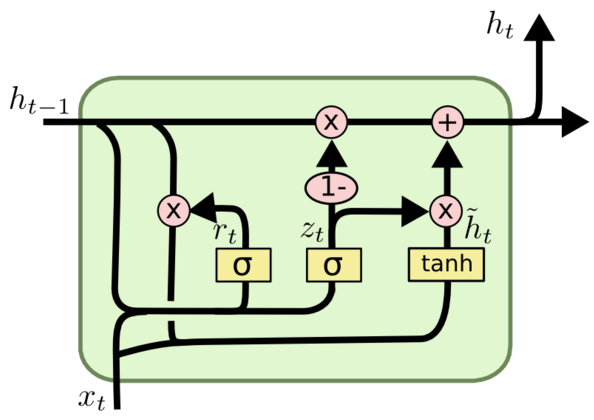

Gated Recurrent Units

Although RNNs can theoretically capture long-term dependencies, they are very hard to train to do so in practice. Gated recurrent units are designed to have more persistent memory, making it easier for RNNs to capture long-term dependencies.
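
The gate equations are not spelled out in the text here, so the sketch below uses a commonly cited GRU formulation with an update gate `z` and a reset gate `r`. The parameter names, the update-gate bias `b_z`, and the convention that `z` weights the previous state are assumptions for the example; other references swap the roles of `z` and `1 - z`.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def gru_step(x, h_prev, p):
    """One GRU step under the assumed convention h_t = z * h_prev + (1 - z) * h_tilde."""
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h_prev + p["b_z"])  # update gate
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h_prev)             # reset gate
    h_tilde = np.tanh(p["W"] @ x + r * (p["U"] @ h_prev))     # candidate memory
    return z * h_prev + (1.0 - z) * h_tilde


# Toy sizes (assumed): word-vector dim d, hidden dim D_h.
rng = np.random.default_rng(0)
d, D_h = 4, 3
params = {
    "W_z": rng.normal(scale=0.5, size=(D_h, d)),
    "U_z": rng.normal(scale=0.5, size=(D_h, D_h)),
    "b_z": np.zeros(D_h),
    "W_r": rng.normal(scale=0.5, size=(D_h, d)),
    "U_r": rng.normal(scale=0.5, size=(D_h, D_h)),
    "W": rng.normal(scale=0.5, size=(D_h, d)),
    "U": rng.normal(scale=0.5, size=(D_h, D_h)),
}

x = rng.normal(size=d)
h_prev = np.array([0.3, -0.2, 0.7])
h_new = gru_step(x, h_prev, params)

# With a large update-gate bias, z is close to 1 and the old state is carried over.
keep_params = dict(params, b_z=np.full(D_h, 20.0))
h_kept = gru_step(x, h_prev, keep_params)
print(h_new, h_kept)
```

When the update gate saturates near 1, the previous hidden state passes through almost unchanged, which is the "persistent memory" behaviour that helps with long-term dependencies.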

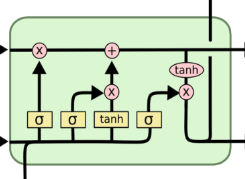

Long Short-Term Memories